High dynamic range (HDR) technology has become a key part of modern display equipment. Whether it’s one of the best TVs or a great gaming monitor, HDR can transform the quality of the image you are looking at. If you’re in the market for a new screen of some sort, chances are HDR will be mentioned somewhere in the spec sheet.

As a technology, HDR has been around for about a decade, with Dolby Vision being the first iteration back in 2014. Since then, further refinements and improvements have seen several variations of HDR appear, and the use of HDR has gone far beyond expensive televisions.

HDR on a monitor means that you will experience improved contrast and more realistic, vibrant colours, creating a more detailed picture on your display. But while the basic principles of HDR remain constant, different HDR formats and various levels of certification mean that not every monitor with HDR will look the same. So, what are the differences, and why do they matter?

What is HDR?

As we mentioned above, HDR stands for High Dynamic Range, and it describes the difference – or range – between the darkest and lightest parts of the image displayed on your monitor. It also allows for greater variation in colour and tone, creating a richer, more vibrant palette that looks more realistic to the human eye. The result is far greater detail in an image than you’d get on a Standard Dynamic Range (SDR) display.

As well as being useful in surgical settings, HDR is popular for watching films, gaming, and also in the creation and editing of photo and video content. For anyone who works in content creation, a good colour grading monitor can be an essential component – and HDR is critical to help deliver precise contrast adjustments during the colour grading process.

How Does HDR Work?

Every pixel in your monitor displays colour by blending the three primary colours – blue, green, and red. The intensity of each colour can be specified by its “bit depth” (essentially, the amount of data – or bits – used to dictate the brightness of each pixel). A standard image will usually be 8-bit, while HDR images will have a bit depth of 10, 12, or potentially even 16-bit.

The bit depth tells us the total number of colour and brightness variations available at each pixel. For example, an 8-bit channel allows for a maximum of 256 shades of each primary colour. A 10-bit channel offers 1,024 different shades, 12-bit offers 4,096, and 16-bit a massive 65,536. Contrast differs too: an SDR display typically offers a contrast ratio of around 1,200-to-1, while an HDR display can achieve contrast ratios of over 200,000-to-1.

In other words, increasing the bit depth of each pixel can exponentially increase the number of variations in colour and brightness available on your monitor, leading to significantly more control over the image. This is what leads to enhanced contrast, more detail, and more accurate colours.
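The arithmetic behind those figures is simple enough to check yourself. The short Python sketch below (the function names are our own, chosen for illustration) works out how many shades each bit depth gives per colour channel, and how many colours that makes once red, green and blue are combined.

```python
def shades_per_channel(bit_depth: int) -> int:
    """Number of distinct levels a single colour channel can take."""
    return 2 ** bit_depth


def total_colours(bit_depth: int, channels: int = 3) -> int:
    """Number of colours available when every channel uses the same bit depth."""
    return shades_per_channel(bit_depth) ** channels


for bits in (8, 10, 12, 16):
    print(
        f"{bits}-bit: {shades_per_channel(bits):,} shades per channel, "
        f"{total_colours(bits):,} colours in total"
    )
```

Each extra bit doubles the number of shades per channel, which is why the step from 8-bit (about 16.7 million colours) to 10-bit (about 1.07 billion colours) is so large.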

What about colour depth and lighting?

HDR is only as good as the technology underpinning it. In other words, HDR requires both good-quality HDR content and a good-quality monitor to display it. The monitor needs three main features: a wide colour gamut, local dimming, and a high peak brightness.

Strictly speaking, HDR refers to a display’s brightness range rather than the range of colours it can produce. Wide colour gamut (WCG) is, therefore, often seen in tandem with HDR. It allows the monitor to display a wider palette of colours – richer and deeper shades, for example – than is available on a standard RGB (sRGB) display. The widest colour gamuts in current use are up to 72 per cent larger than sRGB.

Local dimming refers to the way a monitor is backlit. There are three main types of backlighting: Edge-Lit, Direct-Lit, and Full Array. Edge-Lit displays have lights around the edge of the screen. One form of Edge-Lit dimming is known as Global Dimming, where a string of LEDs is found on just one edge of the LCD panel, and controls the brightness across the entire screen. Direct-Lit monitors have rows of LEDs spread across the rear of the display, which allows for slightly more control over brightness in different parts of the screen.

Full Array monitors divide the screen into zones and place more LEDs across the rear than Direct-Lit monitors. Having more LEDs allows for greater control over light and dark areas of the display, through what’s known as local dimming. A Full Array monitor will generally produce a higher quality image than Edge-Lit or Direct-Lit displays.
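To see why zones matter, here is a deliberately simplified Python sketch. It assumes each backlight zone is simply driven to match the brightest pixel it sits behind, which is our own simplification rather than how any particular monitor works, and the tiny 4x4 "frame" is made up for illustration.

```python
def zone_backlight_levels(frame, zone_rows, zone_cols):
    """Return one backlight level per zone, set to the brightest pixel in that zone.

    `frame` is a list of rows of luminance values between 0.0 and 1.0.
    Assumes the frame divides evenly into the requested number of zones.
    """
    height, width = len(frame), len(frame[0])
    zone_h, zone_w = height // zone_rows, width // zone_cols
    levels = []
    for zr in range(zone_rows):
        row = []
        for zc in range(zone_cols):
            pixels = [
                frame[y][x]
                for y in range(zr * zone_h, (zr + 1) * zone_h)
                for x in range(zc * zone_w, (zc + 1) * zone_w)
            ]
            row.append(max(pixels))
        levels.append(row)
    return levels


# A mostly black frame with one bright object in the bottom-right corner.
frame = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.9, 1.0],
    [0.0, 0.0, 0.8, 0.9],
]

# Global dimming: one level for the whole screen.
global_level = max(max(row) for row in frame)

# Local dimming with a 2x2 grid of zones.
local_levels = zone_backlight_levels(frame, zone_rows=2, zone_cols=2)

print(global_level)
print(local_levels)
```

With global dimming the whole backlight has to run at full power for the sake of one bright corner, so the black areas look grey. With four zones, three of them can switch off entirely.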

Peak brightness refers to how bright your monitor or television can go. The higher the peak brightness, the brighter the brightest parts of the picture can get, allowing for greater contrast as a result. Brightness is usually measured in nits, and to get the most from HDR, you’ll want a display that can hit at least 1,000 nits (for comparison, a traditional LCD might offer somewhere between 200 and 500 nits at peak brightness).
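Contrast ratio is just the brightest level a screen can show divided by its darkest. The Python sketch below makes that concrete and converts the ratio into photographic "stops" (each stop is a doubling of light). The black-level figures are hypothetical values picked so the ratios match the ones quoted earlier; they are not measurements of any real display.

```python
import math


def contrast_ratio(peak_nits: float, black_nits: float) -> float:
    """Brightest level divided by darkest level."""
    return peak_nits / black_nits


def dynamic_range_stops(ratio: float) -> float:
    """Express a contrast ratio as stops, where one stop is a doubling of light."""
    return math.log2(ratio)


# Hypothetical SDR-style display: 300 nits peak, 0.25 nits black level.
sdr_ratio = contrast_ratio(300, 0.25)

# Hypothetical HDR-style display: 1,000 nits peak, 0.005 nits black level.
hdr_ratio = contrast_ratio(1000, 0.005)

for name, ratio in (("SDR", sdr_ratio), ("HDR", hdr_ratio)):
    print(f"{name}: {ratio:,.0f}-to-1, about {dynamic_range_stops(ratio):.1f} stops")
```

Notice that the black level matters as much as the peak: a brighter screen only gains contrast if its dark areas stay dark, which is where local dimming comes in.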

Types of HDR in monitors

HDR comes in a few different formats, each of which has its own unique qualities:

HDR10

HDR10 is arguably the simplest HDR format. It’s a technical standard with defined ranges and specifications for any content or displays that wish to use it. HDR10 uses something called static metadata: a single set of light and colour values, based on the best average for that content, which stays fixed from start to finish and is read the same way by every display. It forms a baseline standard upon which other HDR standards are built.

HDR10+

HDR10+ is a format introduced by Samsung and Amazon Video in 2017. It builds on HDR10 by using dynamic metadata instead of static metadata. This allows brightness levels to be adjusted on a frame-by-frame or scene-by-scene basis, potentially delivering greater detail than HDR10 offers.
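The difference between static and dynamic metadata is easier to see with numbers. The Python sketch below is a toy model, not the real HDR10 or HDR10+ processing: the scene names and nit values are invented, and the "tone mapping" is a plain linear scale where real displays use more sophisticated curves.

```python
# Hypothetical peak brightness (in nits) for three scenes in one film.
scenes = {"night street": 120, "indoor": 400, "sunlit snow": 4000}

# Hypothetical display that tops out at 1,000 nits.
DISPLAY_PEAK_NITS = 1000


def scale_factor(content_peak: float, display_peak: float) -> float:
    """How much the picture must be scaled down to fit the display (1.0 = untouched)."""
    return min(1.0, display_peak / content_peak)


# Static metadata: one peak value describes the whole film.
static_peak = max(scenes.values())
static_scale = {name: scale_factor(static_peak, DISPLAY_PEAK_NITS) for name in scenes}

# Dynamic metadata: each scene carries its own peak value.
dynamic_scale = {
    name: scale_factor(peak, DISPLAY_PEAK_NITS) for name, peak in scenes.items()
}

for name in scenes:
    print(f"{name}: static x{static_scale[name]:.2f}, dynamic x{dynamic_scale[name]:.2f}")
```

In this toy model, static metadata dims every scene to a quarter of its intended brightness because of one very bright moment, while dynamic metadata leaves the night and indoor scenes untouched and only scales down the snow scene.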

Dolby Vision

Developed by Dolby, Dolby Vision also uses dynamic metadata, to give more nuanced control over brightness on a scene-by-scene or frame-by-frame basis. But unlike HDR10 or HDR10+, Dolby Vision also adjusts its metadata to suit the capabilities of the monitor you are using. In other words, by identifying what screen you have, Dolby Vision can then tell it what light and colour levels to use.

While HDR10 and HDR10+ are both open standards – which means any content producer can use them freely – Dolby Vision is proprietary. This means that it’s only available if the content producer paid to use Dolby Vision to create the content, and the result also depends on what your monitor can actually support in terms of brightness and colour.

Hybrid Log-Gamma (HLG)

Hybrid Log-Gamma is a standard developed jointly by the BBC and Japanese broadcaster NHK. It’s primarily found in use by broadcasters because it doesn’t rely on metadata. Instead, it works by combining the gamma curve used in SDR with an extra logarithmic curve that calculates the extra brightness needed for an HDR display. HLG is an open standard that is also backwards compatible with SDR – making it ideal for broadcasters who don’t then need to transmit separate SDR and HDR content into homes.
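For the curious, the "hybrid" curve can be written in a few lines of Python. The sketch below uses the HLG transfer function and constants as published in ITU-R BT.2100; the function name is our own, and this covers only the encoding curve, not a full broadcast pipeline.

```python
import math

# Constants from the HLG definition in ITU-R BT.2100.
A = 0.17883277
B = 1 - 4 * A
C = 0.5 - A * math.log(4 * A)


def hlg_oetf(scene_light: float) -> float:
    """Map normalised scene light (0.0 to 1.0) to an HLG signal value (0.0 to 1.0)."""
    if not 0.0 <= scene_light <= 1.0:
        raise ValueError("scene_light must be between 0.0 and 1.0")
    if scene_light <= 1 / 12:
        # Darker part of the picture: a square-root curve, similar to SDR gamma.
        return math.sqrt(3 * scene_light)
    # Brighter part of the picture: a logarithmic curve for the HDR highlights.
    return A * math.log(12 * scene_light - B) + C


for light in (0.0, 1 / 12, 0.5, 1.0):
    print(f"scene light {light:.3f} -> signal {hlg_oetf(light):.3f}")
```

The two halves meet at a signal value of 0.5. An SDR screen can make sensible use of the gamma-like lower half, while an HDR screen also uses the logarithmic upper half for highlights.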

Dolby Vision vs HDR10 vs HDR10+

Of these four HDR types, HDR10 is the simplest and most widely used. It is, however, slightly more limited than the others due to its use of static metadata, which applies one set of brightness values to the whole piece of content. HDR10+ is an upgrade over HDR10, because it uses dynamic metadata, which allows for specific scenes and frames to be adjusted to suit what your monitor can display.

Dolby Vision is arguably superior to either HDR10 or HDR10+ in terms of the quality of the image it can deliver. Unlike HDR10 and HDR10+, which use 10-bit colour depth, Dolby Vision uses 12-bit, which means a much wider range of brightness and colours is available.

Benefits of HLG

HLG uses a standard dynamic range signal before tacking on some extra information for HDR displays. It doesn’t rely on metadata, which can be particularly beneficial for broadcasters, as it means the data can’t get lost, or out of sync with the picture on the screen (which can lead to errors in the colour and brightness you should be seeing).

Understanding HDR Standards and Certifications

As well as there being several different types of HDR technology, there are also many levels of HDR support provided by monitor manufacturers. And they don’t all give you the same quality of picture. Fortunately, HDR certification brings some semblance of order to what could otherwise be a meaningless label.

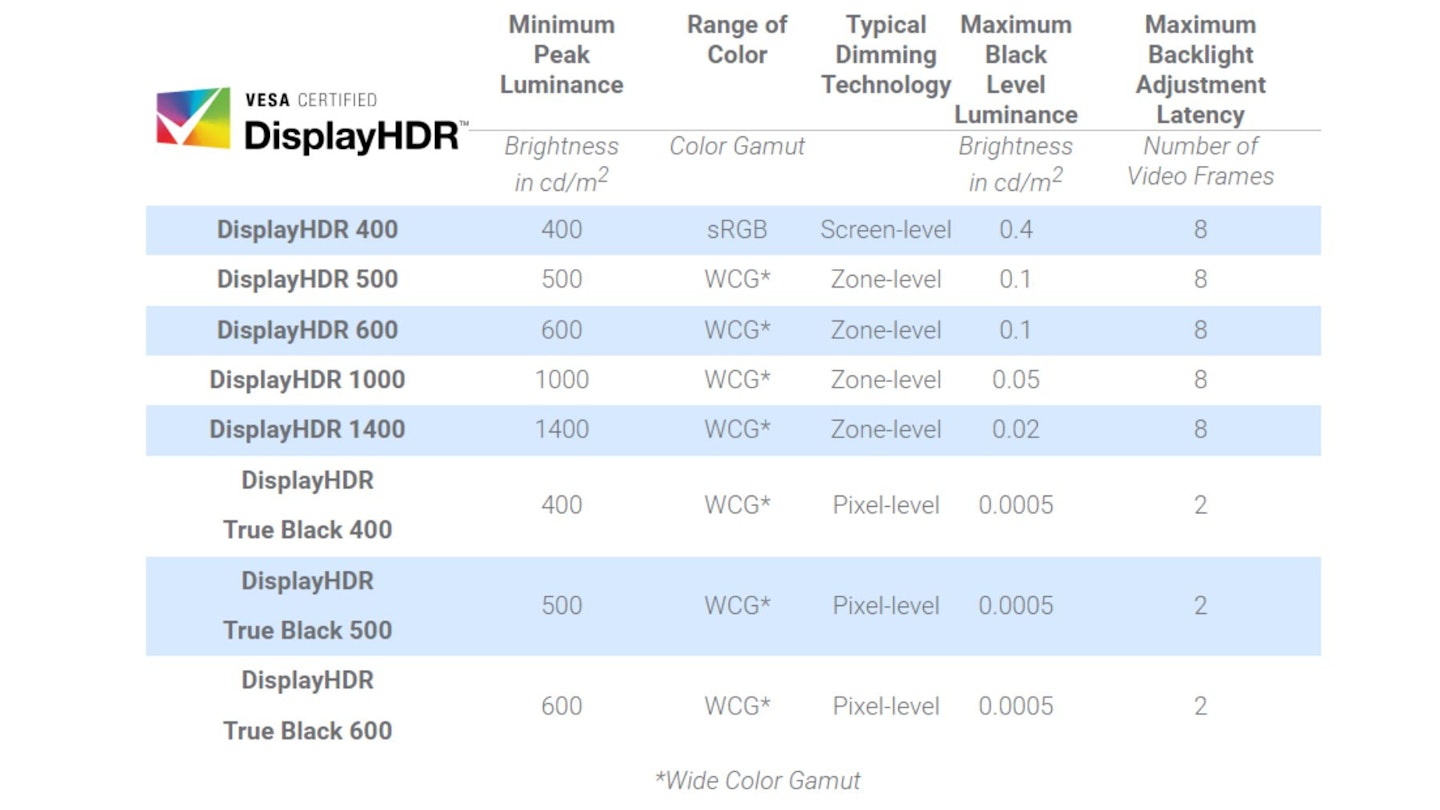

The VESA DisplayHDR standard provides a framework by which monitors can be differentiated in terms of their HDR performance. The lowest level of certification is DisplayHDR 400, and the highest level is DisplayHDR 1400. To achieve a particular certification, a monitor has to meet certain benchmark criteria, including brightness, colour gamut, and the type of dimming the monitor uses.

DisplayHDR 400, for example, means the monitor will reach a peak brightness of at least 400 nits, use 8-bit colour depth, and rely on global dimming to dictate light and dark areas of the screen. DisplayHDR 500, in comparison, requires a peak brightness of 500 nits, 10-bit image processing, and local or edge-lit dimming. “True Black” certifications will either use Full Array dimming or they will be OLED displays (which don’t require any backlighting, as each pixel creates its own light).

A higher DisplayHDR certification equates to a better overall HDR output, with brighter displays, wider colour gamut, and more dynamic dimming options (DisplayHDR 1000 and above use Full Array dimming, for example).
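As a rough illustration of how the tier numbers relate to brightness, the Python sketch below picks the highest DisplayHDR tier whose number a given peak brightness reaches. This is only a reading aid: real certification also tests colour gamut, bit depth and dimming, so brightness alone never earns a badge. The function name is our own.

```python
# Standard (non "True Black") DisplayHDR tiers, named after their peak brightness in nits.
DISPLAYHDR_TIERS = (400, 500, 600, 1000, 1400)


def highest_tier_by_brightness(peak_nits: float):
    """Return the highest tier whose brightness figure is reached, or None.

    Brightness is only one of several certification criteria, so treat the
    result as an upper bound rather than a certification.
    """
    eligible = [tier for tier in DISPLAYHDR_TIERS if peak_nits >= tier]
    return max(eligible) if eligible else None


for nits in (350, 450, 1000):
    tier = highest_tier_by_brightness(nits)
    label = f"DisplayHDR {tier}" if tier else "below DisplayHDR 400"
    print(f"{nits} nits: at most {label}")
```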

Certification is essential for ensuring that manufacturers are reliably informing customers of what a particular monitor can do. It’s also important for making sure that you, the consumer, understand why one monitor might be more expensive than another, and what the benefits are of paying the extra (or not).

If you want a “true” HDR experience, then you’ll want a monitor with a minimum of DisplayHDR 500 certification. And for the best experience, DisplayHDR 1000 or above is what you’re looking for. You’ll likely find the price goes up alongside a higher certification.

Considerations When Using HDR

If you want to use your monitor to its full potential, there are a few other things you’ll need to think about.

HDR content – the content you are consuming needs to be made in HDR. If you’re watching ordinary footage, even the best HDR display will still display it in SDR, because it won’t be told what needs to be brighter or darker. Many games, films and TV shows are now available for HDR consumption. But it’s worth checking before you get started whether it’s HDR or not.

Display cables – if you’ve got yourself a monitor that supports HDR, you’ll want to make sure you have a display cable that’s capable of transferring the data quickly enough to deliver the best output. DisplayPort cables will do the job, if that’s what’s on your monitor, while if you have HDMI, look for HDMI 2.1 to get the best speeds possible.

Graphics cards – your monitor will only let you use HDR if the graphics card in your computer supports it. The good news is that virtually any modern graphics card can deliver HDR content. But if you’re using an older machine, it might be worth checking. Many newer graphics cards also support HDMI 2.1, which matters if you are connecting to your monitor over HDMI.
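To get a feel for why cable speed matters, the Python sketch below estimates how much raw picture data a given resolution, refresh rate and bit depth produces every second. It is a back-of-the-envelope figure only: it ignores blanking intervals, signal encoding overhead and compression, so the bandwidth a real cable needs will differ. The function name is our own.

```python
def uncompressed_video_gbps(
    width: int,
    height: int,
    refresh_hz: int,
    bits_per_channel: int,
    channels: int = 3,
) -> float:
    """Raw pixel data rate in gigabits per second (active pixels only)."""
    bits_per_second = width * height * refresh_hz * bits_per_channel * channels
    return bits_per_second / 1e9


modes = (
    ("4K, 60 Hz, 8-bit (SDR)", 3840, 2160, 60, 8),
    ("4K, 60 Hz, 10-bit (HDR)", 3840, 2160, 60, 10),
    ("4K, 120 Hz, 10-bit (HDR)", 3840, 2160, 120, 10),
)

for label, width, height, hz, bits in modes:
    rate = uncompressed_video_gbps(width, height, hz, bits)
    print(f"{label}: about {rate:.1f} Gbit/s")
```

Moving from 8-bit to 10-bit adds a quarter more data at the same resolution and refresh rate, and doubling the refresh rate doubles it again, which is why a high-refresh HDR monitor is more demanding on cables and ports.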

Challenges with HDR

As we’ve alluded to already, not all HDR-capable monitors are created equal. Some may only have VESA DisplayHDR 400 certification, which means they aren’t a great deal better than SDR monitors. There are also some monitors that have no VESA certification at all, and simply claim to offer HDR. Without certification, it’s hard to know how good these monitors are. You may find that they offer few, if any, of the features that would earn them VESA certification, and therefore aren’t true HDR monitors.

You’ll also need to ensure the rest of your setup can support HDR content. While your monitor may be fine, if you don’t have the right cables, or you aren’t watching content that has been developed with HDR support, you won’t be able to enjoy the full HDR experience.

Tips to optimise your HDR settings

Hopefully, you won’t need to do much to get the best picture from your monitor. But, if you feel like the picture isn’t as good as it should be and you want to play around with any of your settings on Windows, here are a few suggestions:

Make sure HDR is on – it might seem obvious, but your system isn’t always set to automatically use HDR. To check this, go to your Settings menu, then go to System, then Display. Turn on the Use HDR option.

Adjust your brightness settings – if you are finding that some of your content seems overly bright, or too dark, you might want to change the brightness settings. To do this, go to the display settings, and select Use HDR. You’ll see a slider for brightness settings, which you can move up or down until you get the brightness level you want.

Switch off night light – night light is designed to reduce the amount of blue light you are exposed to at night, as blue light has been linked to poorer sleep quality. But because it produces warmer colours, it can upset the colour balance on your monitor. Under the Display settings, select Night light. You can then adjust the slider to change the strength of the night light colouring, or turn it off completely.

Update your graphics drivers – you may have automatic updates scheduled. But if not, go to Windows Update in the Settings menu, to see if there are any updates due for your graphics drivers.

Tweak the refresh rate or resolution – this is another possible solution if you feel the colour balance isn’t quite right on your monitor. You can reduce the refresh rate by going to the Display menu, then choosing Advanced display. If possible, try the 30 Hz refresh rate, to see if that makes a difference.

You can also try reducing the resolution by going to the same Advanced display menu and choosing Display adapter properties. Under List All Modes, select and apply the setting that includes 1920 by 1080, 60 Hz.

Is HDR worth it?

Categorically, yes. With an ever-increasing library of games, films and television shows created with HDR in mind, the difference between SDR and HDR is significant. And happily, an increasing number of affordable monitors (and televisions) now support HDR. If you have the budget for an HDR monitor, it really is an essential feature to get the most out of your content.

Steven Shaw is a Senior Digital Writer covering tech and fitness. Steven writes how-to guides, explainers, reviews and best-of listicles covering a wide range of topics. He has several years of experience writing about fitness tech, mobile phones, and gaming.

When Steven isn’t writing, he’s probably testing a new smartwatch or fitness tracker, putting it through its paces with a variety of strength training, HIIT, or yoga. He also loves putting on a podcast and going for a long walk.
